Restoring 8mm Home Movies

My family, like many families in the days before video cameras, captured snippets of family history four minutes at a time on 8mm reels of film. As I was a younger member of a large family, many of my memories of early family history were formed while watching the old films on an old Bell & Howell projector during the semi-annual home movie nights.

Around the time I was eleven or twelve, about junior high, I got interested in making animated films, partly inspired by Terry Gilliam's crazy animations scattered about various Monty Python episodes. Scraping together about twelve dollars for film and developing, I could make a movie with my brother or with friends. These were largely unplanned productions, figured out after the film had been bought, the film loaded, and the "talent" assembled. "OK, what should we do now?" was a common question. We didn't have a tripod, lighting was poor, and the results were, uh, "organic" (like dung is organic), but were still a lot of fun to make.

In the summer of 2010, in my mother's basement I came across the box containing all the family movies. It had been many years since I had seen them. Years before a couple of my siblings had had the films transferred to VHS, but I had lost the tape. Film transfer technology has gotten much better in the intervening years, so I decided then to have them recaptured.

This page is a summary of what I learned about it, and how I went about getting a good quality restoration. I'm not saying this is the best way; it is just the way I did it and if you are considering doing something like this yourself, maybe you can learn from my missteps. This summary is hardly comprehensive. Here is a good write up of many of the issues.

Added 2019: Note that this web page was written in 2011, immediately after finishing up the film conversion project. I have not worked on it or thought much about it since. I am contacted, at least monthly, by someone asking if I will convert films for them. To head off more of that: sorry, the answer is no, I don't do that; I already have a full-time job and not enough free time.

Others ask for me to recommend a place to do the conversion. Because I haven't looked at all at this sector in 8 years, I really have no useful opinion. I'm sorry I can't be more help, but this article already contains all the things I knew when I was somewhat up to date.

Interesting links on the same topic:

Transfer Technology

Realtime transfer

Back in VHS days, one common method of transferring films to tape was quite simple: the film would be projected on a screen, and a video camera would film the screen and capture the image in real time. Such transfers didn't require much more than what any camera store already had on hand, and so many stores offered such services. In fact, one can do this at home.

One problem is that the frame rate of film is different from the frame rate of video. For instance, regular 8mm film is typically 16 fps, super 8mm film is typically 18 fps, and video is 30 fps (really 29.97 if you want to nitpick). It is very possible to get strobing effects from the mismatch in frame rate. Another problem is that most projectors don't evenly light the frame: the center is brighter than the edges, producing a "hot spot" effect. If a standard projector is used, the film gate (a piece of metal with a rectangular hole in the center which the film is projected through) will block out all the image near the edges of each frame, as much as 20% of the image. If either the projector or the camera filming the image isn't perpendicular to the projection surface, image distortion may result.

There are slightly better units dedicated to the job that can remove some of these problems, but they still suffer from the problem of unsynchronized frame rate conversion.

These days the image is captured to DVD instead of VHS, but there are the same fundamental flaws as before. Do not under any circumstance settle for this option, no matter how cheap.

Frame by Frame transfer

The next step up is "frame by frame" transfer. In this setup, a modified projector advances the film by one frame, and an image sensor mounted directly in front of the lens of the projector captures one frame and the data is transferred to a computer. Then the film is advanced to the next frame, and the process repeats, typically doing ten or so frames per second. By synchronizing the display of each frame to the capture of each frame, there is no flicker induced by having some frames captured while the film gate is closed or only partially open.

In addition to the inherently better capture, software on the computer can perform image processing to remove defects like scratches, do color balance correction, and set light levels. Up-converting from the film's natural frame rate to video's 30 fps can be done in an intelligent way to minimize the distortions that may occur.

This approach is more expensive than real time transfer, but the quality is worth it. With good construction and careful operation, frame by frame capture can rival the quality obtained by high end scanning equipment (described next). However, nothing is guaranteed; some frame by frame equipment is not well made, or the operator of the equipment might be careless or incompetent.

Here are a few links to people who make this type of equipment, some just for personal use. Note that I have no experience with them; they are just representative of the type of technology discussed in this section. I will note that Fred Van de Putte has produced some stunning results with his home made equipment:

- Fred Van de Putte's telecine equipment, also see his videos on how he built his system, part 1 (hardware), and part 2 (capturing). View the other videos on his youtube channel to see how he has restored a few films using his capture system and avisynth for postprocessing.

- Workprinter-XP

- Construction of a home made Telecine machine

- Tobin Cinema Systems, Inc.

High End Film Scanning

There are specially built machines for transferring film to digital format; these machines were first used by high end video production companies to transfer theatrical movies to digital format for broadcasters to use. For example, the Movie Channel doesn't actually broadcast old movies from film. The word you should use when doing searches on the subject is "telecine."

Like the "frame by frame" capture just described, film scanners also capture images a frame at a time, but in a more sophisticated way. Rather than taking a snapshot of each frame, like a camera, they scan the film a line at a time or even a pixel at a time. At first blush this sounds like a step backwards, but rather than needing to capture one million pixels in one flash, film scanners can afford to use a very high quality sensor because they need only one, or one row's worth. Such equipment is invariably part of a suite of hardware dedicated to image processing, with trained operators to adjust lighting and correct color.

When discussing color correction, there are a few terms to learn. The best quality, and most expensive, is "scene by scene" correction. The operator will adjust lighting and color correction for each scene, and will ensure consistency in the colors between different scenes. "Best light" means the colorist will make corrections for each scene, often on the fly, but with less attention to consistency. "One light", the cheapest approach, means the colorist will make a best compromise that seems to work across all scenes; in reality, if there is a dramatic shift in lighting or color, the colorist will likely rewind and readjust at that point.

Another advantage of film scanners is they advance the film continuously, rather than using the typical sprocket advance and register scheme. This won't make a difference for some films, but many old films have bad splices and sprocket holes that are broken or "chewed on" and won't feed reliably through a sprocket mechanism. Some old films can even shrink, leading to bad sprocket hole spacing. The film scanner software has a means of registering each frame without relying on sprocket holes to do the job.

Having been around for a long time, these high end film scanners are now getting cheap enough for use in capturing home movies. Some services use old high end equipment from the 1990s; others use state of the art equipment, but you pay for it. Either way, the quality is likely to be a cut above frame by frame capture equipment. To process 8mm film, these machines need custom adapters, since they were designed for 16mm and 35mm work.

Rank Cintel scanners were the top end scanners, but the Spirit DataCine 4K now seems to be the (very expensive) top end. As far as I know, nobody does 8mm film transfers using Spirit-grade hardware.

Transfer Format

No matter which capture scheme is used, eventually you need to decide in what form you want your digitized video to be returned to you.

All such places offer to transfer your films directly to DVD. They can add titles and even atmospheric music if you desire it. It is possible to grab the video off of these DVDs for subsequent editing, but it is already heavily compressed MPEG2 video, and isn't convenient for editing. If you don't want to mess with editing or pressing your own DVDs, then by all means, get your capture done directly to DVD.

Another option is to have the film transferred to a DV video tape; these tapes are digital format, and require some means of transferring the bits from the tape into your PC for further editing. DV files are reasonable for editing, as they offer frame level access with moderate compression.

As I wanted maximum control over the end result, I opted to have my video captured and saved as video files, one per film. I mailed a portable hard drive to the capture house and they returned my film as uncompressed AVI files. Uncompressed video results in huge files, but the advantage is that there are no compression artifacts to worry about, and editing at an arbitrary frame level is no problem.

As a comparison, a standard definition MPEG 2 video found on a DVD is typically encoded at 3.5 to 8.0 Mbit/s; DV is 25 Mbit/s; uncompressed video is around 240 Mbit/s.
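To see where a number like 240 Mbit/s comes from, here is a back-of-the-envelope calculation in Python. It is only a sketch: it assumes 720x480 frames at 29.97 fps with 24 bits per pixel, and the usual 80 frames per foot for regular 8mm film. The function names are made up for this illustration.

```python
def uncompressed_mbit_per_s(width, height, bits_per_pixel, fps):
    """Raw video data rate in megabits per second (1 Mbit = 1,000,000 bits)."""
    return width * height * bits_per_pixel * fps / 1_000_000


def reel_size_gigabytes(width, height, bits_per_pixel, feet=50, frames_per_foot=80):
    """Size of one reel stored uncompressed, one video frame per film frame."""
    frames = feet * frames_per_foot
    total_bytes = frames * width * height * bits_per_pixel / 8
    return total_bytes / 1_000_000_000


rate = uncompressed_mbit_per_s(720, 480, 24, 29.97)
size = reel_size_gigabytes(720, 480, 24)
print(f"uncompressed SD video: about {rate:.0f} Mbit/s")
print(f"that is roughly {rate / 25:.0f} times the DV rate of 25 Mbit/s")
print(f"one 50 foot reel of regular 8mm: about {size:.1f} GB")
```

Under these assumptions the rate works out to just under 250 Mbit/s, and a single 50 foot reel (4000 frames) takes a bit over 4 GB, which is why a portable hard drive is the practical way to ship the files.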

If you opt for a file-based transfer on a hard disk, there are more questions to be answered. At what resolution should the film be captured? What video container format should be used? What type of codec should be used to put the video in the file? Should the film be captured with pulldown? Interlaced or progressive format? Some of these subjects (such as choice of codec) require their own web page, but here is a very, very brief summary of some of these considerations.

There is a temptation to capture the film at the highest offered resolution. It does cost more, though, and processing the results is a lot slower than capturing at standard definition resolution (720x480). Keep in mind that 8mm frames are quite small (less than 8mm!) and don't inherently contain more than a certain amount of information. Getting 1920x1080 capture of such film will just give you very clear pictures of the film grain. Personally, I think going higher than 1024x768 is overkill for 8mm.

As was mentioned, the native film rate is 16 fps or 18 fps for 8mm film, 24 fps for 16 mm film, and 30 fps for video. One way to convert the native film frame rate to the video frame rate is to duplicate some frames in the video stream. That is OK for playback, but it makes processing the video difficult. Ask the transfer house to give you one frame of video for each frame on the film. You can do frame rate conversion after you have done all of your film editing and enhancement.

TV has historically presented video in interlaced format, presented at 29.97 fps (in North America). However, film itself is inherently progressive. Deinterlacing software can be used to convert interlaced back to progressive format. If you have a choice, stick with progressive scan, as it makes subsequent image processing tasks easier.

If you use an Apple Mac computer for editing, you probably want to use quicktime MOV format as the file container. Using the ProRes HQ codec will give you the highest quality transfer, as it doesn't compress the data much -- only subsampling the chroma by a factor of two.

If you use a PC, you'll probably want to use an AVI file container. For a codec, you should avoid anything that applies a lot of compression. Highly compressed files are relatively small, but the compression leads to quality loss and difficulty in editing. You want to save compression to the very end of your chain of editing tools. MJPG (motion jpeg) is a fine format, as it doesn't have any form of interframe compression, allowing for easy access to arbitrary frame boundaries. There are some lossless compressors, like huffyuv and lagarith. Uncompressed 10-bit 4:2:2 video is also known as v210 by its fourcc code, and there are PC codecs for reading this uncompressed format.

No matter which format you pick, you should first ask for a small (like 10 frame) sample of film encoded in that format from your transfer house so you can verify that you are able to open it and recover the images. It would be terrible to spend $600 capturing a bunch of movies only to find that you can't read them.

Cleaning

Old films can be dusty, or have an accumulation of grime from a lifetime of handling. Old films often have suffered scratches; which side of the film is scratched makes a difference.

Cheaper transfers simply load your old film and go. The next step up is to have the film "dusted" by mechanically passing it between brushes to try and knock off any dust or dirt. Better quality cleaning has the film pass between two sponges wetted with a cleaning solution; the solution is more active than water, but not so much that it will damage the film.

There is also something called a "wet gate" (or "wetgate") transfer. To explain it, we need some background information. The actual film emulsion is on one side of the plastic backing. If the scratch is on the emulsion side, it often shows up as a blue streak in the film; the film is truly damaged and this can't be fixed during the capture (although it can be fixed in post-processing). If the scratch is on the backing side, it shows up as a dark scratch when projected. The wetgate process means the film is coated with a liquid which has an index of refraction matching that of the plastic; this liquid fills in the valley formed by a scratch, and the film emulsion can then be captured properly. It might sound like hokum, but it really works. Below is a youtube video showing the effect on a particularly badly scratched film.

Cost

In general, quality costs money. Real time transfer will be the least expensive, frame-by-frame capture more expensive, and film scanning will be more expensive yet. There is a large variation in pricing, and the cost per foot drops if you have a large order. However, to give you some estimate, real time capture can be $4-$8 for a 50 foot reel of 8mm film; older scanning equipment can run $15-$25 for a 50 foot reel. I've seen quotes as high as $40 per 50 foot reel; as I didn't opt for that service, I don't know if it delivers superior results or is simply more expensive.

In addition to the cost of the transfer, usually based on the number of feet of film, there are often other charges. There can be a preparation charge to repair bad splices or bad leaders. The higher end transfer equipment is very expensive to operate and the operators don't want to waste a lot of time messing around with 50 ft. film reels. Instead, all of your reels get joined together on a single 400 ft. reel which can then be efficiently processed on the expensive scanning equipment. There can be a charge for cleaning the film, and a charge for transferring the result onto DVD, tape, or hard drive. Capturing sound films is more expensive than silent films. Some places charge a fixed overhead per job, perhaps to scare away the small orders.

I recently had about 35 reels of silent 8mm film captured at standard def resolution, saved to .avi files. In fact, I had it done twice. The first time was at a local video transfer place using frame by frame capture. In total, it was about $300. The nominal price was $7.50 per 50 foot roll, but there were some other fees that raised the total.

After struggling to improve the quality in post-processing, I bit the bullet and had it all redone at Cinepost. Due to the volume of films processed, the cost was a very reasonable $15 per 50 ft. reel, plus some other reasonable overhead expenses. In total it was about $700, but, as you'll see, it was well worth it. Cinepost uses Cintel film scanners with some custom hardware that allows them to handle 8mm film. The turn around time was about a week.
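When comparing quotes it helps to reduce everything to cents per foot and to see how much of the bill is not footage at all. This small sketch uses the prices quoted above; keep in mind that the reel count and the totals are the approximate figures from my description, not numbers from an invoice, and the function names are just for illustration.

```python
def cents_per_foot(price_per_reel, feet_per_reel=50):
    """Convert a per-reel price in dollars to cents per foot of film."""
    return price_per_reel * 100 / feet_per_reel


def fees_beyond_footage(total_paid, reels, price_per_reel):
    """The part of the bill not explained by the per-reel price."""
    return total_paid - reels * price_per_reel


jobs = [("frame by frame", 7.50, 300), ("film scanner", 15.00, 700)]
for name, per_reel, total in jobs:
    extra = fees_beyond_footage(total, reels=35, price_per_reel=per_reel)
    print(f"{name}: {cents_per_foot(per_reel):.0f} cents/foot, "
          f"roughly ${extra:.0f} in other charges")
```

With roughly 35 reels, the other charges (preparation, cleaning, media, overhead) are a noticeable part of the total in both cases, so ask for them up front.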

Image Comparison

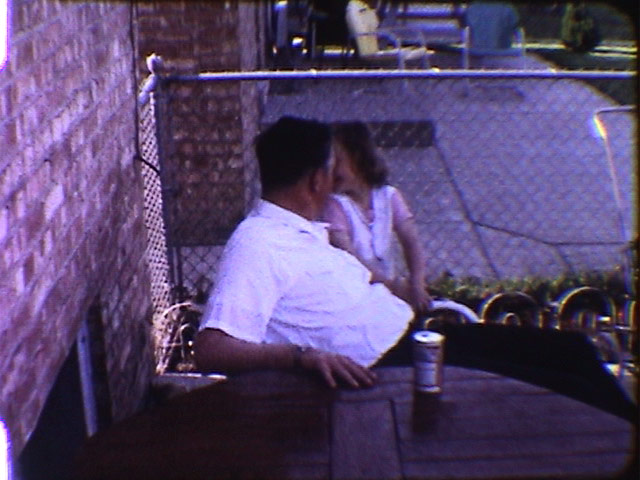

I don't have a lot of experience, in fact, just two data points. But I wanted to share what I got back from the mom & pop video service I used (frame-by-frame capture) vs. when I went to a professional telecine service (Cinepost). Or to put it another way, what does 15¢/foot processing give you vs. 30¢/foot? These are the corresponding frames of the same film from the two captures. Note that alignment isn't exactly the same. Note too that I had asked both to do a wide capture including the sprocket holes; normally they crop the video so the sprocket hole doesn't show up. I prefer to do the cropping myself.

Capture from a cheap frame-by-frame service:

Capture using a Rank Cintel film scanner, without post-processing, done at Cinepost:

It should be stated that normally Cinepost does post processing on the image after capture, but I requested that it not be done, as I wanted to do it all myself. Normally, Cinepost would return better looking results than this.

The most obvious difference vs. the cheap capture is that, even in this raw state, the colors are much more natural. Look too at the bright reflection off the stroller handle on the right edge of the frame: the cheaper capture bleeds brightness into the area next to the handle. The Cinepost capture has much more uniform lighting. Look at the yellow and green chairs at the top of the frame: the image is much crisper; this is evident too in the wires of the chain link fence.

There is something else that is instructive in this capture. Look at the color of the light coming through the sprocket holes on the left side of the frame. In the cheap capture, it is white light; in the professionally done one, the illuminating light color as well as intensity is chosen to compensate for any color shifts of the aging film.

Image Enhancement

Many people receive captures like the first one shown on a DVD; that is that and they just live with the poor quality. Much can be done to improve the quality in post processing, but it is always best to start with the best capture you can.

vReveal

First I tried a piece of software named vReveal, made by a company named MotionDSP. MotionDSP makes some very expensive software for forensic video restoration. vReveal is a consumer version of it, but with many fewer knobs, at a very reasonable price point (US$39 as of January, 2011). There are only a few knobs for controlling what algorithms are applied to the image, such as motion stabilization, contrast adjustment, fill lighting, sharpening, etc. What it does do, though, it generally does well. They have a free version you can download and use, but it adds a small vReveal watermark on the processed video.

Here is the same Cinepost-captured frame as above after vReveal has enhanced it:

One of the things vReveal doesn't do is allow changing the settings during a movie. This can be worked around by chopping a film into one small video per scene, then tweaking the settings for each scene, and pasting the results back together, but that would be more than tedious. Also, some of the knobs are too coarse; for example, the "clean" function is either "off," "on," or "high." Whatever its shortcomings, though, it couldn't be easier to use.

Avisynth

I probably could have used vReveal on my films and been done quickly. However, I like to tinker, so I took a different approach, one populated with pitfalls and fraught with frustration.

Avisynth is an open source software product that runs only under Microsoft Windows. It has no commercial support, development advances in fits and starts, and despite a healthy user community, you will need to figure out a lot of things through trial and error.

Normally if a file is named something like foo.avi and you open it under windows, it knows how to decode the AVI container format. Inside are streams of data for video and audio, each of which must be decoded using the codec specific to that stream type. The data is processed by the codec and fed back to the application as a raw stream of pixels or audio samples, which are then displayed or what have you.

Avisynth is not an application as much as it is a driver. Conventionally, avisynth files have a suffix of ".AVS". Existing video for windows-aware applications can open .avs files and display the video. The strange thing is that .avs files don't contain any video! Instead, they are scripts that describe how to generate video. When an application opens the file and asks for frame 0, the avisynth driver reads the script, figures out how to generate frame zero, then feeds the decoded video to the application, which thinks the video had simply been read from a file.

There are plenty of tutorials around that describe how to use avisynth, but here is a small example to give a flavor of what it looks like.

film = "myvideo.avi"

# tweak() below only accepts YUV video, so convert to YUY2 rather than RGB
src = avisource(film).ConvertToYUY2().crop(100,100,400,300)

scenes = src.trim(622,894) ++ src.trim(100,434)

smaller = scenes.Lanczos4Resize(320,240)

brighter = smaller.tweak(bright=30, sat=1.2, cont=1.1)

brighter

This (untested) script opens the file named "myvideo.avi", converts it to the YUY2 pixel format (the tweak filter used later only works on YUV video), and selects a 400x300 rectangle of pixels from each frame, offset from the top by 100 pixels and from the left by 100 pixels. Next it extracts two scenes, the first from frame 622 to 894, and the second from frame 100 to 434. Notice that the order of the scenes has been reversed from the original. Avisynth is a non-linear editor, meaning it isn't constrained to process frames in the order they appear in the file. These two scenes are then resized to 320x240 pixels, their brightness is boosted by 30 units, color saturation is enhanced by 20%, and the contrast is boosted by 10%. The result is returned from the script. There are many ways to write this script, as the syntax is somewhat flexible.
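The idea of a script that describes video rather than containing it can be mimicked in a few lines of Python. This is only a toy model of frame serving, not how avisynth is actually implemented: a clip is a length plus a function from frame number to frame, and `source`, `trim`, and `splice` are hypothetical helpers that just remap frame numbers. As in avisynth, the end frame of a trim is inclusive.

```python
class Clip:
    """A clip knows its length and how to produce any frame on request."""

    def __init__(self, length, get_frame):
        self.length = length
        self.get_frame = get_frame


def source(length):
    # Stand-in for a real file: "frame n" is simply the number n.
    return Clip(length, lambda n: n)


def trim(clip, first, last):
    # Frames first..last inclusive, renumbered to start at 0.
    return Clip(last - first + 1, lambda n: clip.get_frame(first + n))


def splice(a, b):
    # Clip a followed by clip b.
    def get(n):
        return a.get_frame(n) if n < a.length else b.get_frame(n - a.length)
    return Clip(a.length + b.length, get)


src = source(1000)
scenes = splice(trim(src, 622, 894), trim(src, 100, 434))
print(scenes.length, scenes.get_frame(0), scenes.get_frame(273))
```

Nothing is copied or decoded until some application asks for a particular frame, at which point the request is translated back through the chain to a frame number in the original file.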

One of the strengths of avisynth is that it has an open architecture where new filters (processing algorithms) can be created by anybody and plugged into the avisynth framework as seamlessly as if they had been part of the original program. One of the weaknesses of avisynth is there are myriad filters, some good, some bad, some compatible, some broken, and it takes a lot of time to figure out what plays well together. It is also daunting that in effect it is a tool with 10,000 knobs, a potentially paralyzing number of options.

Avisynth can do other things than just video processing. It can overlay videos, merge and process audio, change frame rates, change video formats, do titling, display analysis data, and more.

The thing that helped me tremendously was a script written by a user of avisynth, Fred Van de Putte. Fred not only built his own telecine equipment, he has invested hundreds of hours tweaking scripts to achieve the best possible results. Even so, each film requires its own knob tuning, so the script doesn't make things nearly as easy as vReveal does. The script and extensive dialog about using it can be found in one thread of a forum where things like this are discussed. The very first post in the thread is kept up to date with the latest version of Fred's script, and there are links to some of his results, which are well worth viewing to see just how high quality 8mm film can be after restoration.

Here is the same frame as before, this time restored using Fred's script:

As compared to the vReveal results, note that the colors are more lifelike and the image is sharper. There are other benefits as well, but this one frame can't show them all.

Restoration Details

Again, this thread is the best place to read up on Fred's enhancement script, but this section will give an overview of what it does. The steps are performed in the order listed here:

Stabilize

All of my movies were filmed with a handheld camera, which resulted in substantial amounts of shake and uneven panning. The script uses some sophisticated algorithms to detect what part of the scene is background (that is, unchanging) image and what parts are moving objects. This can be tricky considering that when the camera pans, in some naive sense everything is moving relative to where it was in the previous frame. With this information, the script looks at a sequence of frames and adjusts each one's position to smooth out any short-term jitter or shake and to smooth the trajectory of intentional pans.
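The smoothing half of this step can be sketched on its own. The example below assumes the hard part, estimating where the camera was pointing in each frame, has already been done, and it uses a plain moving average as a stand-in; I am not claiming this is what Fred's script does internally. The correction for each frame is the difference between the smoothed path and the measured one.

```python
def smooth_path(positions, radius=2):
    """Moving average of camera positions; the window shrinks at the ends."""
    smoothed = []
    for i in range(len(positions)):
        lo = max(0, i - radius)
        hi = min(len(positions), i + radius + 1)
        window = positions[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


def corrections(positions, radius=2):
    """Shift to apply to each frame so that it follows the smoothed path."""
    smoothed = smooth_path(positions, radius)
    return [s - p for s, p in zip(smoothed, positions)]


# Horizontal camera position per frame: a slow pan to the right plus shake.
measured = [0, 3, 1, 5, 3, 7, 5, 9, 7, 11]
print(smooth_path(measured))
print(corrections(measured))
```

A larger `radius` gives a steadier picture but requires bigger shifts, which in turn means more of the frame edge has to be cropped away afterwards.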

Crop

The film needs to be cropped to just the essential portions at this point. There are two reasons why cropping is needed. First, the original capture may have caught some of the border around the frame of the film and maybe some sprocket holes. Second, when the motion stabilization step, above, shifts each frame to smooth out the image, one or two edges of the image must be filled in with something to make up for the part of the image which was shifted into the frame (an equal amount on the opposite side was shifted out of the frame).

Clean

Even if the film was cleaned before capture, various specks of dirt might still adhere to the frame, or bits of emulsion might have flaked off years before, resulting in splotches that appear for one frame and then disappear again. This step does something very sophisticated to fix these problems. For each frame, it compares the corresponding parts of the frame to the frames immediately before and after it. If it finds something which looks like dirt (of a certain size, of a too bright or too dark color) which appears for a single frame, it can cover up that spot by borrowing the image from the corresponding place in the adjacent frames.

This is a little bit tricky as objects move from frame to frame. If a white ball moves swiftly across a scene, it takes some intelligence to tell that it is really one moving object and not a series of white specks that happen in a different place in successive frames. Sometimes the heuristics that protect against things like this don't work and small, rapidly moving objects seem to disappear! Thankfully it is rare, and can usually be fixed up by adjusting the strength of the cleaning algorithm.
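Here is a stripped-down sketch of the idea in Python. It leaves out everything that makes the real filters good: there is no motion compensation and no check on the size of the blob, so it has exactly the moving-ball problem just described. Frames are small grids of grayscale values, and `threshold` is an arbitrary number picked for the illustration.

```python
def remove_single_frame_spots(prev, cur, nxt, threshold=40):
    """Replace pixels that differ sharply from both neighbors in time.

    A pixel is treated as dirt when the previous and next frames agree
    with each other but the current frame is far brighter or darker
    than both of them.
    """
    cleaned = []
    for row_p, row_c, row_n in zip(prev, cur, nxt):
        out_row = []
        for p, c, n in zip(row_p, row_c, row_n):
            neighbors_agree = abs(p - n) < threshold
            is_outlier = abs(c - p) > threshold and abs(c - n) > threshold
            if neighbors_agree and is_outlier:
                out_row.append((p + n) // 2)  # borrow from adjacent frames
            else:
                out_row.append(c)
        cleaned.append(out_row)
    return cleaned


prev = [[100, 100, 100], [100, 100, 100]]
cur = [[100, 250, 100], [100, 100, 20]]  # one white speck, one dark speck
nxt = [[102, 100, 100], [100, 100, 100]]
print(remove_single_frame_spots(prev, cur, nxt))
```

Both specks are replaced with the surrounding gray. Feed the same function a bright one-pixel "ball" that moves one column per frame and it gets erased too, which is why the real filters first work out how things are moving.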

Here is a short clip showing how cleaning and denoising (next) can remove the frame gunk. Notice the blotch on the upper right part of the statue pedestal is removed, and the smudges near the middle top part of the frame are gone. The right side is notably sharper due to the way the image processing script was written, but the sharpening isn't an inherent part of dirt removal.

(this clip of the 1939 World's Fair is from the Prelinger Archives)

Denoise

Denoising is different than cleaning, but it operates on some of the same principles that the cleaner does. Film grain contributes a certain amount of noise to the image. By comparing a series of adjacent frames, the algorithm identifies the corresponding point of an object in each of the frames. It can then do some intelligent averaging to discern what portion of the signal is due to the inherent attributes of the object being filmed and what is just contributed by film grain, so that film grain can be reduced. Removing too much grain causes the film to lose some of its character, and can lose fine detail, resulting in a plastic look.
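A minimal sketch of the averaging idea, in Python, under a big simplifying assumption: the frames are already perfectly aligned, so the same pixel position shows the same point of the object in every frame. Real denoisers track motion first. Neighboring values are averaged in only when they are close to the current value, so genuine changes in the scene are not smeared; the `threshold` value is arbitrary.

```python
def temporal_denoise(frames, index, radius=1, threshold=12):
    """Average each pixel with the same pixel in nearby frames.

    Values further than `threshold` from the current value are assumed
    to be real changes in the scene rather than grain and are left out.
    """
    center = frames[index]
    lo = max(0, index - radius)
    hi = min(len(frames), index + radius + 1)
    result = []
    for i, value in enumerate(center):
        samples = [f[i] for f in frames[lo:hi] if abs(f[i] - value) <= threshold]
        result.append(sum(samples) / len(samples))
    return result


# Three frames of a one-row image: a gray wall with grain, plus a light
# that switches on in the last pixel of the final frame.
frames = [
    [98, 103, 100, 50],
    [104, 97, 101, 52],
    [99, 100, 96, 200],
]
print(temporal_denoise(frames, 1))
```

The grainy wall pixels are pulled toward their common value, while the last pixel ignores the frame where the light came on. Raising `radius` or `threshold` removes more grain and is also how you end up with the plastic look.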

Sharpen

Some of the previous steps tend to soften the image somewhat; this step applies a filter to restore some of the sharpness, paying special attention to the edges of objects where sharp transitions are the most noticeable. Like anything, sharpening can be overdone. Sharpening amplifies noise, and sharp edges can produce ghost images near the edge, called "ringing."
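One common sharpening technique, the unsharp mask, is easy to show on a single row of pixels. I am using it here only to illustrate the trade-off; it is not necessarily the filter Fred's script applies. The image is blurred, and the difference between the original and the blur is added back, scaled by `amount`.

```python
def blur(signal):
    """3-tap blur with weights 1/4, 1/2, 1/4; the end samples are repeated."""
    padded = [signal[0]] + list(signal) + [signal[-1]]
    return [0.25 * padded[i] + 0.5 * padded[i + 1] + 0.25 * padded[i + 2]
            for i in range(len(signal))]


def unsharp_mask(signal, amount=1.0):
    """Add back the difference between the signal and a blurred copy."""
    return [s + amount * (s - b) for s, b in zip(signal, blur(signal))]


edge = [50, 50, 50, 50, 150, 150, 150, 150]  # dark region next to a bright one
print(unsharp_mask(edge, amount=1.0))
```

The flat areas are unchanged, but the pixel just before the edge drops to 25 and the pixel just after rises to 175. That undershoot and overshoot is what makes the edge look crisper, and with a larger `amount` it becomes the visible outline around objects mentioned above.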

Frame rate conversion

Here is the point where the native frame rate of the film, be it 16 fps, 18 fps, or something else, gets converted to 30 fps. Playing a 16 fps source at 30 fps would make everything happen too quickly. One approach is to duplicate frames until the right speed is achieved. For instance, say one second of film is represented by this sequence of 16 frames, named "A" to "P":

A B C D E F G H I J K L M N O P

can be turned into these thirty frames via duplication such that playback at 30fps takes one second, and the speed of the movie is unchanged:

A A B B C C D D E E F F G G H I I J J K K L L M M N N O O P

Each frame was duplicated, except for frames "H" and "P"; otherwise we would have ended up with 32 frames. Because some frames are duplicated and others aren't, the result will be somewhat uneven speed, a slight stuttering effect. Even if every frame is duplicated the same number of times, the resulting video is more choppy than what video normally looks like, and is especially noticeable during panning.
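The duplication pattern does not need to be worked out by hand. For each output frame, ask which film frame would be on screen at that moment. The sketch below does this with integer arithmetic; the function name is my own, and it treats video as exactly 30 fps rather than 29.97.

```python
def duplicate_to_rate(frames, src_fps, dst_fps):
    """Pick, for each output frame, the source frame showing at that time."""
    n_out = len(frames) * dst_fps // src_fps
    return [frames[k * src_fps // dst_fps] for k in range(n_out)]


film = list("ABCDEFGHIJKLMNOP")          # one second of film at 16 fps
video = duplicate_to_rate(film, 16, 30)  # one second of video at 30 fps
print(" ".join(video))
```

For 16 fps to 30 fps this produces the same thirty-frame sequence shown above, with "H" and "P" as the two frames that appear only once.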

Another approach is to go ahead and duplicate all 16 frames, resulting in 32, and then playing it at 30 fps. The motion will be about 6% too slow, but will be more smooth.

Somewhat more sophisticated, it is possible to blend adjacent frames, weighting the contribution of each based on how close it is in time to the frame which is being synthesized. For instance, if we were simply trying to double the frame rate, we could average together frames A and B producing frame A', and then play them back in A,A',B order.
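In code, blending is a weighted average of the two film frames on either side of each output instant. This is a bare sketch with frames as short lists of brightness values; `blend_to_rate` is a hypothetical name, and the last frame is simply repeated when there is no later frame to blend with.

```python
import math


def blend_to_rate(frames, src_fps, dst_fps):
    """Resample by blending the two source frames nearest each output time."""
    n_out = len(frames) * dst_fps // src_fps
    out = []
    for k in range(n_out):
        pos = k * src_fps / dst_fps        # position in source frame units
        i = min(int(math.floor(pos)), len(frames) - 1)
        j = min(i + 1, len(frames) - 1)    # clamp at the last frame
        w = pos - i                        # 0 = all frame i, 1 = all frame j
        out.append([(1 - w) * a + w * b for a, b in zip(frames[i], frames[j])])
    return out


# Two-pixel frames whose brightness steps 0, 10, 20, 30.
frames = [[0, 0], [10, 10], [20, 20], [30, 30]]
doubled = blend_to_rate(frames, 15, 30)
print([f[0] for f in doubled])
```

When the rate is exactly doubled, every other output frame is a 50/50 mix, which is the A, A', B pattern described above. For 16 fps to 30 fps the weights vary from frame to frame, so almost every output frame is a mix of two film frames.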

Avisynth offers another option still, the most sophisticated of them all. Often it gives stunning results, but it can also produce noticeable artifacts and occasionally comically bad results. Avisynth can actually analyze each frame and discern objects from other objects based on how they move in relation to the background and each other, and how they occlude other objects. The tool can create a synthetic image part way between two frames in a physically plausible manner. Imagine someone standing still but raising their arm. The blending approach would produce an intermediate frame showing a ghost of the arm in both the before and after positions. Frame interpolation would try to create an image with the arm in a position half way between. Keep in mind that the tool is just shifting around pixels and has no a priori understanding of objects, nor any understanding that what it sees originates from 3D objects projected onto a 2D image. Sometimes the heuristics can't figure out what to do, and it falls back on just doing a blending operation. Moving objects sometimes have a "halo" of blurred image around them where the tool has synthesized some background image around the moving object.
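The difference between blending and motion interpolation shows up even in a one-dimensional toy. The sketch below is nothing like the real motion analysis: it assumes the whole row moves as one piece and finds that motion by brute force, whereas real tools estimate motion separately for many small blocks. All of the function names are made up for the illustration.

```python
def shift(row, offset, fill=0):
    """Move every pixel `offset` places to the right, padding with `fill`."""
    n = len(row)
    return [row[i - offset] if 0 <= i - offset < n else fill for i in range(n)]


def estimate_shift(a, b, max_shift=8):
    """Brute-force search for the shift of `a` that best matches `b`."""
    def error(offset):
        return sum(abs(x - y) for x, y in zip(shift(a, offset), b))
    return min(range(-max_shift, max_shift + 1), key=error)


def blend(a, b):
    return [(x + y) / 2 for x, y in zip(a, b)]


def interpolate(a, b):
    """Halfway frame: move `a` forward and `b` back to meet, then average."""
    d = estimate_shift(a, b)
    half = d // 2
    return blend(shift(a, half), shift(b, half - d))


frame_a = [0, 0, 200, 0, 0, 0, 0, 0, 0, 0]  # bright object at position 2
frame_b = [0, 0, 0, 0, 0, 0, 200, 0, 0, 0]  # same object at position 6
print(blend(frame_a, frame_b))
print(interpolate(frame_a, frame_b))
```

Blending gives two half-brightness ghosts at positions 2 and 6; interpolation gives one full-brightness object at position 4. When the motion estimate is wrong, shifting pixels by the wrong amount is what produces the comically bad frames.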

Here is an extreme example: three girls are playing ring around the rosie, and the film has been slowed down by a factor of 100. That is, for each pair of original frames, 99 intermediate frames are synthesized and placed between the two real frames. The left portion is generated via frame duplication: each frame is simply repeated 100 times in a row. In the middle is frame blending: notice how the left edge of the white dress fades in to advance. On the right is interpolation using sophisticated motion analysis: the dress moves to advance; on the other hand, the motion of the girl's legs is less convincing.

The same thing is presented below, but running at real time. This conversion just changes the video from 15 fps to 30 fps. The stuttering effect isn't all that strong in this clip, but it is visible when viewed closely. The frame blending in the middle produces a slightly blurry image for things in motion. The motion interpolation on the right looks the best of the three.

Color and levels adjustment

Finally, here is the place where the brightness, contrast, and gamma can be twiddled, and the color corrected. The script has options for attempting to do this automatically, or one can just leave it unchanged and use another tool for adjusting these details.
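For a sense of what a levels and gamma adjustment does to each pixel value, here is a generic formulation in Python. It is a sketch of the usual black point, white point, and gamma mapping for 8-bit values, not the code from Fred's script, and the parameter names are my own.

```python
def adjust_levels(value, black=0, white=255, gamma=1.0):
    """Map an 8-bit value: stretch black..white to 0..255, then apply gamma."""
    x = (value - black) / (white - black)
    x = min(max(x, 0.0), 1.0)  # clip anything outside the chosen range
    return round(255 * x ** (1.0 / gamma))


# A dim, low-contrast capture whose pixel values only span 30..180.
for v in (30, 105, 180):
    print(v, "->", adjust_levels(v, black=30, white=180, gamma=1.2))
```

Setting the black and white points stretches the picture to use the full range, which raises contrast. A gamma above 1.0 then lifts the midtones without moving pure black or pure white.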

Finished Product

Here is about a minute of the finished product. This is from Thanksgiving dinner, November 1968.

Other Links

Here are a few other articles I've found since writing this; the list isn't exhaustive:

- A general intro, like this write up

- PC Magazine did a quality comparison between different transfer technologies

- Telecine glossary

- Another user's experience

- My friend Dennis does a lot of 8mm and 16mm capture, though he doesn't use avisynth

- More of Dennis' work

Feedback

If you want to contact me for whatever reason, try me at jim@thebattles.net.